Implementing a CNN-based image classification model with TF2.keras

Implementing a convolutional neural network with Keras

# Import packages

import matplotlib as mpl

import matplotlib.pyplot as plt

%matplotlib inline

import numpy as np

import sklearn

import pandas as pd

import os

import sys

import time

import tensorflow as tf

from tensorflow import keras

# tensorflow: V2.1.0

# Load the dataset and split off a validation set

fashion_mnist = keras.datasets.fashion_mnist

(x_train_all, y_train_all), (x_test, y_test) = fashion_mnist.load_data()

x_valid, x_train = x_train_all[:5000], x_train_all[5000:]

y_valid, y_train = y_train_all[:5000], y_train_all[5000:]

print(x_valid.shape, y_valid.shape)

print(x_train.shape, y_train.shape)

print(x_test.shape, y_test.shape)
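As a quick sanity check on the split, the sketch below repeats the same slicing on placeholder arrays with the shape of the Fashion-MNIST training set (60,000 images of 28×28 pixels). The names `x_all`, `y_all`, `x_val`, and `x_tr` are stand-ins introduced here for illustration; the real arrays come from `load_data()`.

```python
import numpy as np

# Placeholder arrays with the same shapes as the Fashion-MNIST training data
# (hypothetical stand-ins, not the real images or labels).
x_all = np.zeros((60000, 28, 28), dtype=np.uint8)
y_all = np.zeros((60000,), dtype=np.uint8)

# The first 5000 samples become the validation set, the rest the training set.
x_val, x_tr = x_all[:5000], x_all[5000:]
y_val, y_tr = y_all[:5000], y_all[5000:]

print(x_val.shape, y_val.shape)  # (5000, 28, 28) (5000,)
print(x_tr.shape, y_tr.shape)    # (55000, 28, 28) (55000,)
```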

# Standardize the pixel values (zero mean, unit variance)

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

x_train_scaled = scaler.fit_transform(
    x_train.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28, 1)
x_valid_scaled = scaler.transform(
    x_valid.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28, 1)
x_test_scaled = scaler.transform(
    x_test.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28, 1)
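The `reshape(-1, 1)` calls matter: `StandardScaler` computes its statistics per column, so flattening every pixel into a single column yields one global mean and standard deviation for the whole training set, and those same statistics are then reused for the validation and test sets. Below is a minimal NumPy sketch of the same arithmetic. It runs on random stand-in data rather than the real images, and the array names are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(0)
# Stand-in "images": random pixel values in [0, 255], not real Fashion-MNIST data.
train = rng.integers(0, 256, size=(100, 28, 28)).astype(np.float32)
test = rng.integers(0, 256, size=(20, 28, 28)).astype(np.float32)

# One global mean and population standard deviation over all training pixels,
# which is what fitting StandardScaler on a single column computes.
mean = train.mean()
std = train.std()

# Apply the training statistics to every split and add the channel axis.
train_scaled = ((train - mean) / std).reshape(-1, 28, 28, 1)
test_scaled = ((test - mean) / std).reshape(-1, 28, 28, 1)

print(train_scaled.shape, test_scaled.shape)
print(float(train_scaled.mean()), float(train_scaled.std()))  # close to 0 and 1
```

The test split is scaled with the training mean and standard deviation, so its own mean and variance will generally be close to, but not exactly, 0 and 1.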

# Neural network model

model = keras.models.Sequential()

# Convolutional layer
model.add(keras.layers.Conv2D(filters=32, kernel_size=3,
                              padding='same',
                              activation='relu',
                              input_shape=(28, 28, 1)))

# Convolutional layer
model.add(keras.layers.Conv2D(filters=32, kernel_size=3,
                              padding='same',
                              activation='relu'))

# Pooling layer
model.add(keras.layers.MaxPool2D(pool_size=2))

# Convolutional layer
model.add(keras.layers.Conv2D(filters=64, kernel_size=3,
                              padding='same',
                              activation='relu'))

# Convolutional layer
model.add(keras.layers.Conv2D(filters=64, kernel_size=3,
                              padding='same',
                              activation='relu'))

# Pooling layer
model.add(keras.layers.MaxPool2D(pool_size=2))

# Convolutional layer
model.add(keras.layers.Conv2D(filters=128, kernel_size=3,
                              padding='same',
                              activation='relu'))

# Convolutional layer
model.add(keras.layers.Conv2D(filters=128, kernel_size=3,
                              padding='same',
                              activation='relu'))

# Pooling layer
model.add(keras.layers.MaxPool2D(pool_size=2))

# Flatten the feature maps
model.add(keras.layers.Flatten())

# Fully connected layer
model.add(keras.layers.Dense(128, activation='relu'))

# Output layer
model.add(keras.layers.Dense(10, activation="softmax"))

model.compile(loss="sparse_categorical_crossentropy",

optimizer = "sgd",

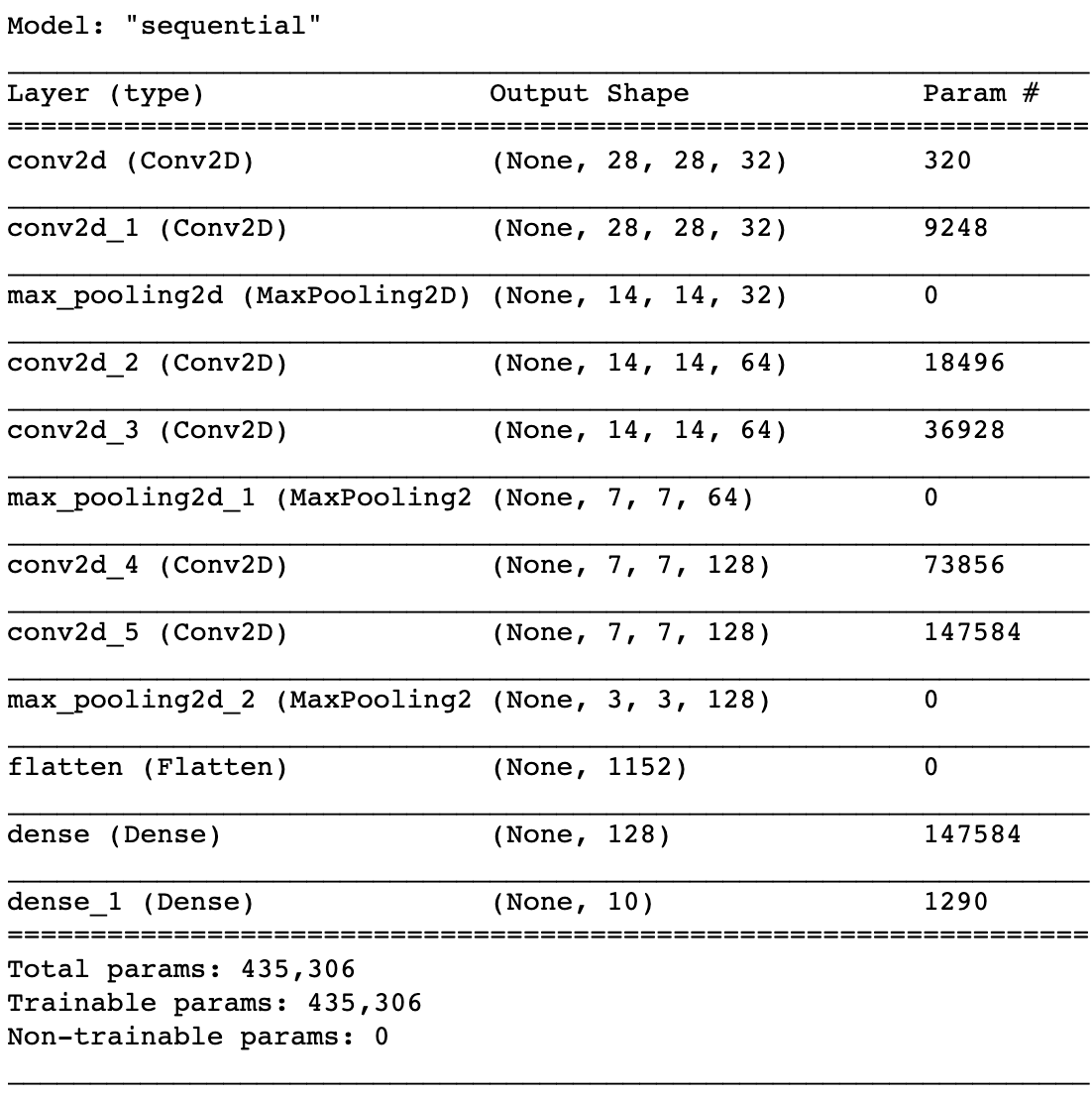

metrics = ["accuracy"])model.summary():
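The output shapes and parameter counts that `model.summary()` reports can also be derived by hand. The sketch below is a pure-Python calculation, assuming the layer configuration shown in the code (3×3 kernels, `'same'` padding, pooling size 2, biases enabled). The helper names `conv2d_params` and `dense_params` are introduced here for illustration and are not Keras functions.

```python
def conv2d_params(kernel_size, in_channels, filters):
    """Weights plus one bias per filter for a square-kernel Conv2D layer."""
    return (kernel_size * kernel_size * in_channels + 1) * filters


def dense_params(in_units, out_units):
    """Weights plus one bias per output unit for a Dense layer."""
    return (in_units + 1) * out_units


size, channels, total = 28, 1, 0
for filters in (32, 64, 128):
    # Two 'same'-padded convolutions keep the spatial size unchanged.
    for _ in range(2):
        total += conv2d_params(3, channels, filters)
        channels = filters
    # MaxPool2D(pool_size=2) halves height and width, rounding down.
    size //= 2

flat = size * size * channels      # units after Flatten
total += dense_params(flat, 128)   # hidden Dense layer
total += dense_params(128, 10)     # softmax output layer

print(size, flat, total)  # 3 1152 435306
```

Under these assumptions the final feature maps are 3×3×128, the flattened vector has 1152 units, and the model has 435,306 trainable parameters in total.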

# Train the model

logdir = './cnn-callbacks'
if not os.path.exists(logdir):
    os.mkdir(logdir)
output_model_file = os.path.join(logdir, "fashion_mnist_model.h5")

callbacks = [
    # Write logs for TensorBoard
    keras.callbacks.TensorBoard(logdir),
    # Keep only the best model seen so far
    keras.callbacks.ModelCheckpoint(output_model_file,
                                    save_best_only=True),
    # Stop when the monitored metric has not improved by at least min_delta for 5 epochs
    keras.callbacks.EarlyStopping(patience=5, min_delta=1e-3),
]

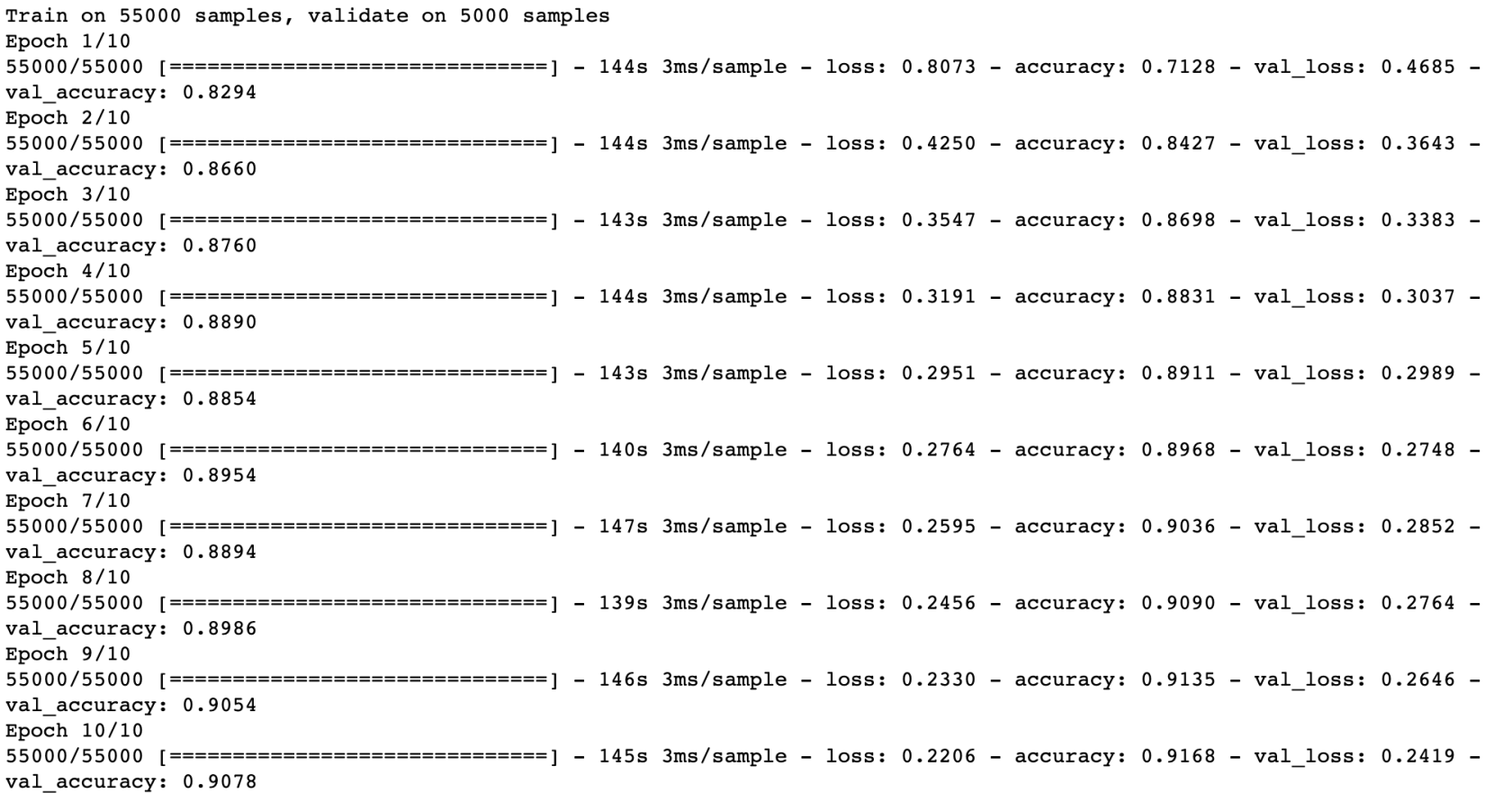

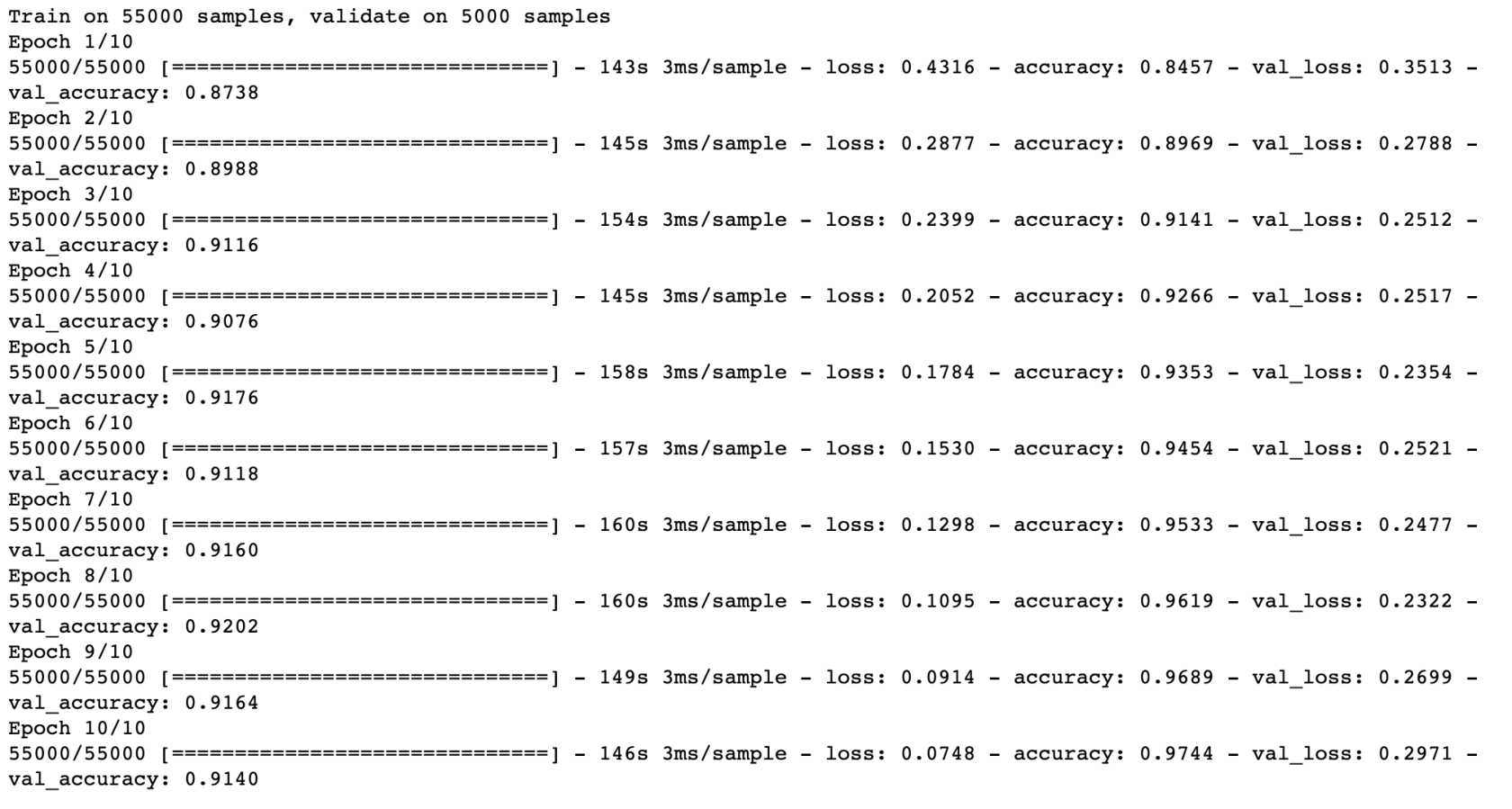

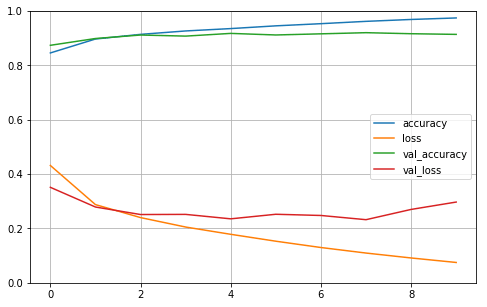

history = model.fit(x_train_scaled, y_train, epochs=10,
                    validation_data=(x_valid_scaled, y_valid),
                    callbacks=callbacks)

After a few minutes of training:

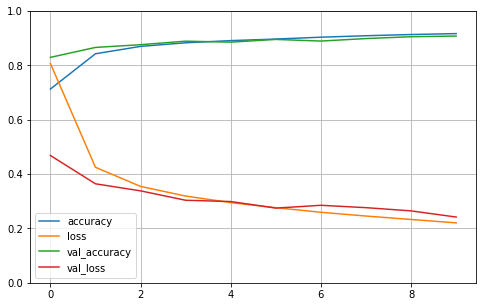
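To make the `patience=5, min_delta=1e-3` settings of the early-stopping callback concrete, here is a simplified pure-Python model of the stopping rule. It is a sketch of the idea, not Keras's implementation: it assumes the monitored value is a validation loss that should decrease, and the function name `early_stopping_epoch` and the loss values are hypothetical.

```python
def early_stopping_epoch(val_losses, patience=5, min_delta=1e-3):
    """Return the 0-based epoch at which training would stop, or None.

    An epoch counts as an improvement only if its loss beats the best
    value seen so far by more than min_delta.
    """
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None


# Hypothetical validation losses, not taken from the run above.
losses = [0.50, 0.40, 0.35, 0.3495, 0.3497, 0.3499, 0.3496, 0.3498, 0.34]
print(early_stopping_epoch(losses))  # 7
```

In this example the loss stops improving by more than 0.001 after epoch 2, so the fifth consecutive epoch without improvement (epoch 7) triggers the stop. With `epochs=10` and `patience=5`, early stopping can only trigger in the second half of the run.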

# Learning curves

def plot_learning_curves(history):
    # Plot every metric recorded during training on one figure
    pd.DataFrame(history.history).plot(figsize=(8, 5))
    plt.grid(True)
    plt.gca().set_ylim(0, 1)
    plt.show()

plot_learning_curves(history)

# Evaluate on the test set

model.evaluate(x_test_scaled, y_test)

Output:

10000/10000 [==============================] - 4s 393us/sample - loss: 0.2668 - accuracy: 0.9028

[0.2668113784730434, 0.9028]

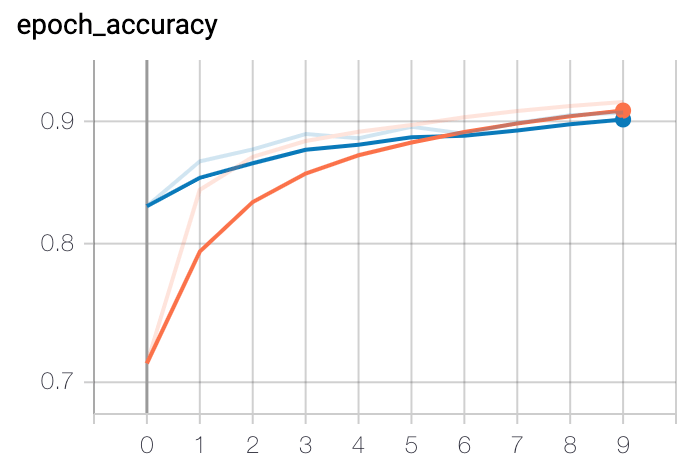

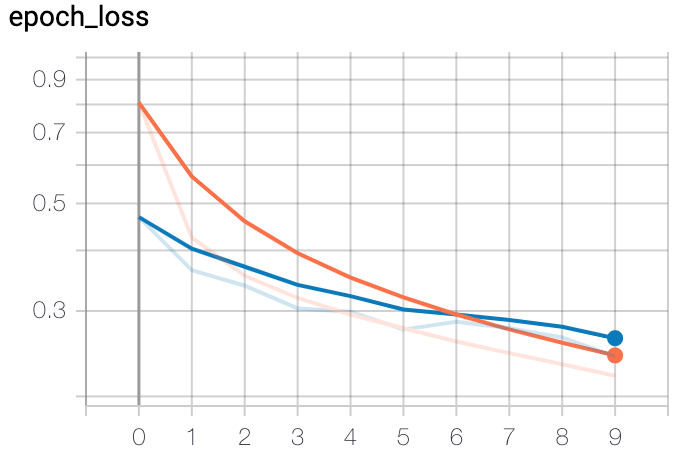

$ tensorboard --logdir=cnn-callbacks/

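Note that the number of filters doubles after each pooling layer (32, then 64, then 128). This is because the pooling size is 2: each pooling layer halves the height and width of the feature maps, and doubling the number of filters partly compensates for the lost spatial resolution.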
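A small pure-Python calculation illustrates the trade-off, assuming the 28×28 input and the three convolutional stages used in this model. Pooling alone would cut the number of activations to a quarter; with the filter count doubled, each stage keeps half as many activations as the one before it.

```python
size, stages = 28, []
for filters in (32, 64, 128):
    # Activations produced by a 'same'-padded conv layer in this stage.
    stages.append((size, filters, size * size * filters))
    size //= 2  # MaxPool2D(pool_size=2) halves height and width

for side, filters, activations in stages:
    print(f"{side}x{side}x{filters}: {activations} activations")
```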

The model above uses ReLU as its activation function; you can also try SELU:

model = keras.models.Sequential()

# Convolutional layer
model.add(keras.layers.Conv2D(filters=32, kernel_size=3,
                              padding='same',
                              activation='selu',
                              input_shape=(28, 28, 1)))

# Convolutional layer
model.add(keras.layers.Conv2D(filters=32, kernel_size=3,
                              padding='same',
                              activation='selu'))

# Pooling layer
model.add(keras.layers.MaxPool2D(pool_size=2))

# Convolutional layer
model.add(keras.layers.Conv2D(filters=64, kernel_size=3,
                              padding='same',
                              activation='selu'))

# Convolutional layer
model.add(keras.layers.Conv2D(filters=64, kernel_size=3,
                              padding='same',
                              activation='selu'))

# Pooling layer
model.add(keras.layers.MaxPool2D(pool_size=2))

# Convolutional layer
model.add(keras.layers.Conv2D(filters=128, kernel_size=3,
                              padding='same',
                              activation='selu'))

# Convolutional layer
model.add(keras.layers.Conv2D(filters=128, kernel_size=3,
                              padding='same',
                              activation='selu'))

# Pooling layer
model.add(keras.layers.MaxPool2D(pool_size=2))

# Flatten the feature maps
model.add(keras.layers.Flatten())

# Fully connected layer
model.add(keras.layers.Dense(128, activation='selu'))

# Output layer
model.add(keras.layers.Dense(10, activation="softmax"))

model.compile(loss="sparse_categorical_crossentropy",
              optimizer="sgd",
              metrics=["accuracy"])

SELU training results:

model.evaluate(x_test_scaled, y_test):

10000/10000 [==============================] - 4s 401us/sample - loss: 0.3093 - accuracy: 0.9099

[0.30925562893152236, 0.9099]
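For reference, the difference between the two activations is easy to see on scalar inputs. The sketch below implements SELU and ReLU in pure Python using the standard SELU constants. The function names are illustrative and are not the Keras implementations.

```python
import math

# Standard constants from the SELU definition.
ALPHA = 1.6732632423543772
SCALE = 1.0507009873554805


def selu(x):
    """Scaled exponential linear unit for a single float."""
    if x > 0:
        return SCALE * x
    return SCALE * ALPHA * (math.exp(x) - 1.0)


def relu(x):
    """Rectified linear unit for a single float."""
    return max(0.0, x)


for x in (-2.0, -0.5, 0.0, 1.0):
    print(x, relu(x), round(selu(x), 4))
```

Unlike ReLU, SELU returns negative values for negative inputs, saturating near `-SCALE * ALPHA` (about -1.758), and scales positive inputs by slightly more than 1.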

This work is released under a CC license. Reposts must credit the author and link to this article.
