How does GitHub achieve MySQL high availability?

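GitHub uses MySQL as the main datastore for everything that is not git, and its availability is critical to GitHub's operation. The site itself, GitHub's API, authentication and more all require database access. GitHub runs multiple MySQL clusters serving different services and tasks. The clusters use a classic master-replicas setup: a single node in a cluster (the master) accepts writes, while the remaining nodes (the replicas) replay changes from the master and serve read traffic.

The availability of master nodes is particularly critical. Without a master, a cluster cannot accept writes: any data that needs to be persisted cannot be persisted. Any incoming change on GitHub, such as commits, issues, user creation, reviews, or new repositories, would fail.

To support writes we clearly need an available writer node in the cluster. Just as important, we need to be able to quickly identify, or discover, that node.

On a failure, say a master crash, we must ensure that a new master comes up right away, and that the rest of the system can quickly learn its identity. The time it takes to detect the failure, run the failover, and advertise the new master's identity makes up the total outage time.

This post describes GitHub's MySQL high availability and master service discovery solution, which allows us to reliably run a cross-data-center operation, tolerate data center isolation, and achieve short outage times on a failure.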

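High availability objectives

The solution described in this post iterates on, and improves, previous high availability (HA) solutions implemented at GitHub. As we scale, our MySQL HA strategy must adapt to change. We wish to have similar HA strategies for MySQL and for other services within GitHub.

When designing a high availability and service discovery solution, a few questions can guide you toward an appropriate design:

- How much outage time can you tolerate?

- How reliable is failure detection? Can you tolerate false positives (which cause premature failovers)?

- How reliable is failover? Where can it fail?

- How well does the solution work across data centers? How does it behave on low-latency and high-latency networks?

- Will the solution tolerate a complete data center (DC) failure or network isolation?

- What mechanism, if any, prevents or mitigates split-brain scenarios (two servers each claiming to be the master of a given cluster, both accepting writes independently and without knowing about each other)?

- Can you afford data loss? To what extent?

To illustrate some of the above, let's first look at our previous HA iteration and why we changed it.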

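Moving away from VIP and DNS based discovery

In our previous iteration, we used:

- orchestrator for failure detection and failover.

- VIP and DNS for master discovery.

In that iteration, clients discovered the writer node by using a name, e.g. mysql-writer-1.github.net. The name resolved to a virtual IP address (VIP) that the master host would acquire.

Thus, on a normal day, clients would just resolve the name, connect to the resolved IP, and find the master listening on the other side.

Consider a replication topology that spans three different data centers.

In the event of a master failure, one of the replicas must be promoted in its place.

orchestrator detects the failure, promotes a new master, and then acts to reassign the name and the VIP. Clients do not actually know the identity of the master: all they have is a name, and that name must now resolve to the new master. However, consider the following.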

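VIPs are cooperative: they are claimed and owned by the database servers themselves. To acquire or release a VIP, a server must send an ARP request. The server that owns the VIP must release it before the newly promoted master can acquire it. This has some undesired effects:

- An orderly failover first contacts the failed master and asks it to release the VIP, and then contacts the newly promoted master and asks it to acquire the VIP. But what if the old master cannot be reached, or refuses to release the VIP? Given that the server has failed in the first place, it is quite likely that it will not respond in a timely manner, or will not respond at all. This leads to two problems:

  - Split-brain: two hosts may end up claiming the same VIP. Different clients may then connect to either of those servers, depending on the shortest network path.

  - The source of truth depends on the cooperation of two independent servers, and such a setup is unreliable.

- Even if the old master does cooperate, the workflow wastes precious time: the switch to the new master waits while we contact the old master.

- Even when the VIP is reassigned, existing client connections are not guaranteed to disconnect from the old server, so we may still experience a split-brain.

In parts of our setup, VIPs are bound by physical location: they are owned by a switch or a router. We can therefore only reassign a VIP to co-located servers. In particular, in some cases we cannot assign the VIP to a server promoted in a different data center, and must make a DNS change instead.

- DNS changes take longer to propagate. Clients cache DNS names for a preconfigured time. A cross-DC failover therefore implies more outage time: it takes longer for all clients to become aware of the identity of the new master.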
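To make the DNS caching delay concrete, here is a minimal sketch of a client-side cache with a fixed TTL. It is a toy model, not a real resolver: the `CachingResolver` class, the 60-second TTL, and the IP addresses are all assumptions made for illustration.

```python
class CachingResolver:
    """Toy client-side DNS cache (hypothetical; not a real resolver)."""

    def __init__(self, records, ttl_seconds):
        self.records = records          # stands in for the authoritative DNS data
        self.ttl_seconds = ttl_seconds  # assumed preconfigured cache time
        self.cache = {}                 # name -> (ip, expires_at)

    def resolve(self, name, now):
        cached = self.cache.get(name)
        if cached and now < cached[1]:
            return cached[0]            # still within TTL: answer may be stale
        ip = self.records[name]
        self.cache[name] = (ip, now + self.ttl_seconds)
        return ip


# Hypothetical addresses for the old and new master.
records = {"mysql-writer-1.github.net": "10.0.0.1"}
resolver = CachingResolver(records, ttl_seconds=60)

before = resolver.resolve("mysql-writer-1.github.net", now=0)

# Failover happens and DNS is updated, but the client still holds a cached answer.
records["mysql-writer-1.github.net"] = "10.1.0.7"
stale = resolver.resolve("mysql-writer-1.github.net", now=30)

# Only after the TTL expires does the client see the new master.
fresh = resolver.resolve("mysql-writer-1.github.net", now=61)
```

In this model the client keeps connecting to the old address until its cache entry expires, which is the extra outage time a cross-DC, DNS-based failover incurs.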

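These limitations alone were enough to push us to look for a new solution, but there was more to consider:

- Masters self-injected heartbeats via the pt-heartbeat service, for the purpose of lag measurement and throttling control. The service had to be started on the newly promoted master. If possible, the service would be shut down on the old master.

- Likewise, Pseudo-GTID injection was self-managed by the masters. It had to start on the new master, and preferably stop on the old master.

- The new master was set as writable. The old master was set as read_only, if possible.

These extra steps contributed to the total outage time and introduced their own failures and friction.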

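The solution worked, and GitHub has had successful MySQL failovers, but we wanted our HA to improve on the following:

- Be data center agnostic.

- Tolerate data center failure.

- Remove unreliable cooperative workflows.

- Reduce total outage time.

- As much as possible, have lossless failovers.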

GitHub's HA solution: orchestrator, Consul, GLB

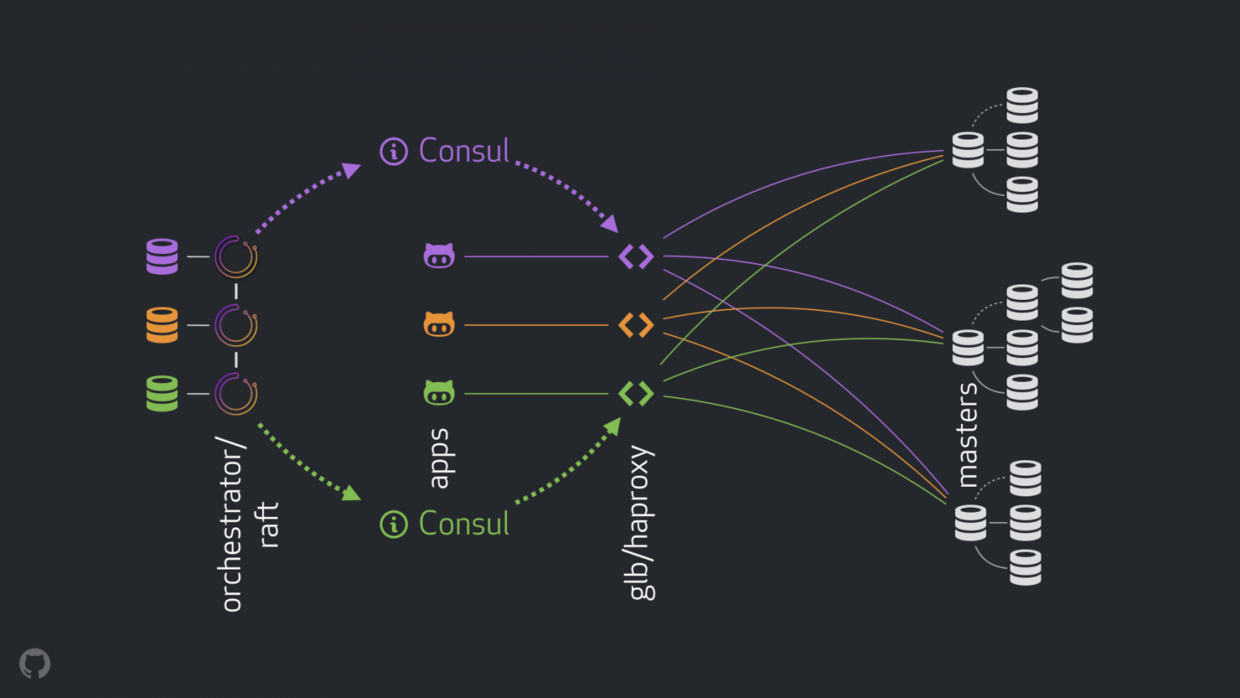

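Our new strategy, along with collateral improvements, solves or mitigates many of the concerns above. Today's HA setup consists of:

- orchestrator to run detection and failovers. We use a cross-DC orchestrator/raft setup, as depicted in the diagram.

- Hashicorp's Consul for service discovery.

- GLB/HAProxy as a proxy layer between clients and writer nodes. The GLB director is open source.

- anycast for network routing.

The new setup removes VIP and DNS changes altogether. While we introduce more components, we are able to decouple them and simplify each task, and to rely on solid and stable solutions. A breakdown follows.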

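A normal flow

On a normal day, apps connect to the writer nodes through GLB/HAProxy.

The apps are never aware of the master's identity. As before, they use a name. For example, the master for cluster1 is mysql-writer-1.github.net. In our current setup, however, this name resolves to an anycast IP.

With anycast, the name resolves to the same IP everywhere, but traffic is routed differently based on the client's location. In particular, in each of our data centers we have GLB, our highly available load balancer, deployed on multiple boxes. Traffic to mysql-writer-1.github.net always routes to the local data center's GLB cluster, so all clients are served by local proxies.

We run GLB on top of HAProxy. Our HAProxy has writer pools: one pool per MySQL cluster, where each pool has exactly one backend server, the cluster's master. All GLB/HAProxy boxes in all DCs have the exact same pools, and they all point to the exact same backend servers in these pools. So if an app wishes to write to mysql-writer-1.github.net, it does not matter which GLB server it connects to. It will always be routed to the actual cluster1 master node.

As far as the apps are concerned, discovery ends at GLB, and there is never a need for re-discovery. It is up to GLB to route the traffic to the correct destination.

How does GLB know which servers to list as backends, and how do we propagate changes to GLB?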

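Discovery via Consul

Consul is well known as a service discovery solution, and it also offers DNS services. In our solution, however, we use it as a highly available key-value (KV) store.

Within Consul's KV store we write the identities of cluster masters. For each cluster, there is a set of KV entries indicating the cluster master's fqdn, port, ipv4, and ipv6.

Each GLB/HAProxy node runs consul-template: a service that listens for changes to Consul data (in our case, changes to cluster master data). consul-template produces a valid config file and is able to reload HAProxy when the config changes.

Thus, a change in Consul to a master's identity is observed by each GLB/HAProxy box, which then reconfigures itself, sets the new master as the single entity in the cluster's backend pool, and reloads to reflect the change.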
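The render-and-reload idea can be sketched in a few lines of Python. This is not consul-template or GitHub's actual template: the `render_writer_pool` helper, the `mysql/master/<cluster>/...` key layout, the backend name, and the host names and addresses are all assumptions made for illustration.

```python
def render_writer_pool(cluster, kv):
    """Render an HAProxy-style backend stanza with the master as its only server."""
    prefix = f"mysql/master/{cluster}"  # hypothetical key layout
    fqdn = kv[f"{prefix}/fqdn"]
    ipv4 = kv[f"{prefix}/ipv4"]
    port = kv[f"{prefix}/port"]
    return (
        f"backend mysql_{cluster}_writer\n"
        "    mode tcp\n"
        f"    server {fqdn} {ipv4}:{port} check\n"
    )


# A plain dict stands in for the KV store; all values are made up.
kv = {
    "mysql/master/cluster1/fqdn": "db-a1.example.net",
    "mysql/master/cluster1/ipv4": "10.0.0.11",
    "mysql/master/cluster1/port": "3306",
}
config_before = render_writer_pool("cluster1", kv)

# A failover rewrites the master's identity in the KV store.
kv["mysql/master/cluster1/fqdn"] = "db-b2.example.net"
kv["mysql/master/cluster1/ipv4"] = "10.1.0.22"
config_after = render_writer_pool("cluster1", kv)

# The proxy only needs a reload when the rendered config actually changed.
needs_reload = config_after != config_before
```

Because the pool is rendered purely from the KV entries, every proxy that sees the same entries ends up with the same single backend.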

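At GitHub we have a Consul setup in each data center, and each setup is highly available. However, these setups are independent of each other. They do not replicate between each other and do not share any data.

How does Consul get told of changes, and how is the information distributed across DCs?

orchestrator/raft

We run an orchestrator/raft setup: orchestrator nodes communicate with each other via raft consensus. We have one or two orchestrator nodes per data center.

orchestrator is responsible for failure detection, for MySQL failover, and for communicating the change of master to Consul. Failover is operated by the single orchestrator/raft leader node, but the news that a cluster now has a new master is propagated to all orchestrator nodes through the raft mechanism.

As orchestrator nodes receive the news of a master change, they each communicate with their local Consul setup: each one invokes a KV write. DCs with more than one orchestrator node will see multiple (identical) writes to Consul.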
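The fan-out to independent Consul setups can be modelled as follows. This is a simplified simulation, not orchestrator code: the `LocalConsul` class, the node and DC names, and the key name are hypothetical, and raft propagation is reduced to a plain loop.

```python
class LocalConsul:
    """Stand-in for one data center's independent KV store."""

    def __init__(self):
        self.kv = {}
        self.write_count = 0

    def put(self, key, value):
        self.kv[key] = value
        self.write_count += 1


# Hypothetical layout: one or two orchestrator nodes per data center.
orchestrator_nodes = {"dc1": ["orc-1a", "orc-1b"], "dc2": ["orc-2a"], "dc3": ["orc-3a"]}
consul_by_dc = {dc: LocalConsul() for dc in orchestrator_nodes}


def on_master_change(cluster, new_master_fqdn):
    """Every node that learns of the change writes to its own DC's store."""
    for dc, nodes in orchestrator_nodes.items():
        for _node in nodes:
            consul_by_dc[dc].put(f"mysql/master/{cluster}/fqdn", new_master_fqdn)


on_master_change("cluster1", "db-b2.example.net")

values = {dc: consul.kv["mysql/master/cluster1/fqdn"] for dc, consul in consul_by_dc.items()}
```

The writes are identical, so a DC with two orchestrator nodes simply stores the same value twice, and every DC converges on the same master without the Consul setups ever talking to each other.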

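Putting the flow together

In a master crash scenario:

- The orchestrator nodes detect the failure.

- The orchestrator/raft leader kicks off a recovery. A new master gets promoted.

- orchestrator/raft advertises the master change to all raft cluster nodes.

- Each orchestrator/raft member receives the master change notification. Each of them updates the local Consul KV store with the identity of the new master.

- Each GLB/HAProxy runs consul-template, which observes the change in the Consul KV store, then reconfigures and reloads HAProxy.

- Client traffic gets redirected to the new master.

Each component has a clear ownership of responsibilities, and the entire design is both decoupled and simplified. orchestrator does not know about the load balancers. Consul does not need to know where the information came from. Proxies only care about Consul. Clients only care about the proxy.

Furthermore:

- There are no DNS changes to propagate.

- There is no TTL.

- The flow does not need the dead master's cooperation. The dead master is largely ignored.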

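Additional details

To further secure the flow, we also have the following:

- HAProxy is configured with a very short hard-stop-after. When it reloads with a new backend server in a writer pool, it automatically terminates any existing connections to the old master.

  - With hard-stop-after we do not even require cooperation from the clients, and this mitigates a split-brain scenario. It is worth noting that this is not hermetic: some time passes before we kill old connections. But there is a point in time after which we are comfortable and expect no nasty surprises.

- We do not strictly require Consul to be available at all times. In fact, we only need it to be available at failover time. If Consul happens to be down, GLB continues to operate with the last known values and makes no drastic moves.

- GLB is set up to validate the identity of the newly promoted master. Similarly to our context-aware MySQL pools, a check is made on the backend server to confirm that it is indeed a writer node. If we happen to delete the master's identity in Consul, no problem: the empty entry is ignored. If we mistakenly write the name of a non-master server in Consul, no problem: GLB will refuse to update and will keep running with the last known state.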
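The validation rules in the last bullet amount to a small decision function. The sketch below is an illustration only, not GLB's implementation: `next_backend` and the `is_writable` callback are hypothetical names, and the writability check is reduced to a set lookup.

```python
def next_backend(current, proposed, is_writable):
    """Decide which backend to use after seeing `proposed` in the KV store."""
    if not proposed:
        return current      # entry deleted or empty: ignore it
    if not is_writable(proposed):
        return current      # not actually a writer node: refuse the update
    return proposed         # validated: switch to the new master


# Hypothetical state: only db-b2 currently accepts writes.
writable_hosts = {"db-b2.example.net"}
check = lambda host: host in writable_hosts

after_delete = next_backend("db-a1.example.net", "", check)
after_mistake = next_backend("db-a1.example.net", "db-c3.example.net", check)
after_failover = next_backend("db-a1.example.net", "db-b2.example.net", check)
```

In both error cases the proxy keeps its last known state, which is why a bad or missing KV entry does not by itself cause an outage.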

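We further tackle concerns and pursue our HA objectives in the following sections.

orchestrator/raft failure detection

orchestrator uses a holistic approach to detecting failure, and as such it is very reliable. We do not observe false positives: we do not have premature failovers, and thus do not suffer unnecessary outage time.

orchestrator/raft further tackles the case of a complete DC network isolation (also known as DC fencing). A DC network isolation can cause confusion: servers within that DC can still talk to each other. Is it they that are isolated from the other DCs, or is it the other DCs that are isolated?

In an orchestrator/raft setup, the raft leader node is the one that runs failovers. A leader is a node that has the support of the majority of the group (a quorum). Our orchestrator node deployment is such that no single data center makes up a majority, and any n-1 data centers do.

In the event of a complete DC network isolation, the orchestrator nodes in that DC get disconnected from their peers in other DCs. As a result, the orchestrator nodes in the isolated DC cannot be the leader of the raft cluster. If any such node happened to be the leader, it steps down. A new leader is assigned from one of the other DCs. That leader has the support of all the other DCs, which are able to communicate among themselves.

Thus, the orchestrator node that calls the shots will be one outside the network-isolated data center. Should there be a master in the isolated DC, orchestrator will initiate a failover to replace it with a server in one of the available DCs. We mitigate DC isolation by delegating the decision making to the quorum in the non-isolated DCs.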
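The deployment property "no single data center makes up a majority, and any n-1 data centers do" can be checked mechanically. The sketch below assumes a simple strict-majority quorum; the node counts per DC are hypothetical examples, not GitHub's actual layout.

```python
def has_quorum(reachable_nodes, total_nodes):
    """A strict majority of the raft group is required to elect a leader."""
    return reachable_nodes > total_nodes // 2


def tolerates_dc_isolation(nodes_per_dc):
    """True if no single DC is a majority and the rest always form one."""
    total = sum(nodes_per_dc.values())
    no_single_dc_majority = all(
        not has_quorum(count, total) for count in nodes_per_dc.values()
    )
    remaining_dcs_have_quorum = all(
        has_quorum(total - count, total) for count in nodes_per_dc.values()
    )
    return no_single_dc_majority and remaining_dcs_have_quorum


# Hypothetical layouts with one or two orchestrator nodes per DC.
three_dcs = tolerates_dc_isolation({"dc1": 2, "dc2": 2, "dc3": 1})
two_dcs = tolerates_dc_isolation({"dc1": 2, "dc2": 1})
```

With five nodes spread 2/2/1, losing any one DC still leaves at least three nodes, so a leader can always be elected outside the isolated DC. With only two DCs split 2/1, the larger DC holds a majority on its own, so isolating it would leave the other side unable to act.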

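Quicker advertisement

Total outage time can be further reduced by advertising the master change sooner. How can that be achieved?

When orchestrator begins a failover, it observes the fleet of servers available for promotion. Understanding replication rules and abiding by hints and limitations, it is able to make an educated decision on the best course of action.

It may recognize that a server available for promotion is also an ideal candidate, such that:

- There is nothing to prevent the promotion of the server (and the user may have hinted that this server is preferred for promotion), and

- The server is expected to be able to take all of its siblings as replicas.

In such a case orchestrator first sets the server as writable, and immediately advertises the promotion (in our case, by writing to the Consul KV store), even while it asynchronously begins to fix the replication tree, an operation that typically takes a few more seconds.

It is likely that by the time our GLB servers have fully reloaded, the replication tree is already intact, but this is not strictly required. The server is good to receive writes!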

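Semi-synchronous replication

In MySQL's semi-synchronous replication, a master does not acknowledge a transaction commit until the change is known to have shipped to one or more replicas. This provides a way to achieve lossless failovers: any change applied on the master is either applied, or waiting to be applied, on one of the replicas.

Consistency comes at a cost: a risk to availability. Should no replica acknowledge receipt of the changes, the master will block and writes will stall. Fortunately, there is a timeout configuration, after which the master reverts to asynchronous replication mode, making writes available again.

We have set our timeout to a reasonably low value: 500ms. This is more than enough to ship changes from the master to local DC replicas, and typically also to remote DCs. With this timeout we observe perfect semi-sync behavior (no fallback to asynchronous replication), and we are comfortable with a very short blocking period in case of acknowledgement failure.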
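The trade-off between waiting for an acknowledgement and falling back can be illustrated with a deliberately simplified model. This is not how MySQL implements semi-sync: the `commit` function and the latency figures are hypothetical, and only the 500ms timeout comes from the text above.

```python
def commit(ack_latencies_ms, timeout_ms=500):
    """Return (blocked_ms, mode) for one commit in a toy semi-sync model.

    `ack_latencies_ms` lists how long each semi-sync replica takes to
    acknowledge; an empty list means no replica responds at all.
    """
    fastest = min(ack_latencies_ms, default=None)
    if fastest is not None and fastest <= timeout_ms:
        return fastest, "semi-sync"          # one acknowledgement is enough
    return timeout_ms, "async-fallback"      # give up waiting, stop blocking


# Hypothetical latencies: a local replica at 2ms and a remote one at 40ms.
healthy = commit([2, 40])

# No replica acknowledges: the master blocks for the full timeout, then falls back.
no_acks = commit([])

# The only replica is slower than the timeout.
slow_replica = commit([800])
```

The timeout bounds the worst-case stall on the master; a lower value shortens the blocking period but makes a fallback to asynchronous replication, and with it a lossy failover, more likely.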

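We enable semi-sync on local DC replicas, and in the event of a master's death we expect (though do not strictly enforce) a lossless failover. Lossless failover on a complete DC failure is costly, and we do not expect it.

While experimenting with the semi-sync timeout, we also observed a behavior that plays to our advantage: we are able to influence the identity of the ideal candidate in the event of a master failure. By enabling semi-sync on designated servers, and by marking them as candidates, we are able to reduce total outage time by affecting the outcome of a failure. In our experiments we observe that we typically end up with the ideal candidates, and hence run quick advertisements.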

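Heartbeat injection

Instead of managing the startup and shutdown of the pt-heartbeat service on promoted and demoted masters, we opted to run it everywhere at all times. This required some patching to make pt-heartbeat comfortable with servers either changing their read_only state back and forth or crashing completely.

In our current setup, pt-heartbeat services run on masters and on replicas. On masters, they generate the heartbeat events. On replicas, they identify that the servers are read_only and routinely recheck their status. As soon as a server is promoted to master, pt-heartbeat on that server identifies the server as writable and begins injecting heartbeat events.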
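The always-on behavior described above can be sketched as a loop body that checks the server's state on every tick. This is an illustration of the idea, not the patched pt-heartbeat: `FakeServer` and `heartbeat_tick` are hypothetical names, and the database is replaced by an in-memory object.

```python
class FakeServer:
    """In-memory stand-in for a MySQL server (hypothetical)."""

    def __init__(self, read_only):
        self.read_only = read_only
        self.heartbeats = []


def heartbeat_tick(server, now):
    """One iteration of an always-on heartbeat loop; returns True if it injected."""
    if server.read_only:
        return False                 # replica: skip, and recheck on the next tick
    server.heartbeats.append(now)    # writable master: inject a heartbeat event
    return True


server = FakeServer(read_only=True)
injected_as_replica = heartbeat_tick(server, now=1)

# Promotion flips read_only off; the same running service starts injecting.
server.read_only = False
injected_as_master = heartbeat_tick(server, now=2)
```

Since the service never needs to be started or stopped during a failover, one step is removed from the promotion workflow.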

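orchestrator ownership delegation

We further delegated the following to orchestrator:

- Pseudo-GTID injection,

- Setting the promoted master as writable and clearing its replication state, and

- Setting the old master as read_only, if possible.

On all things new-master, this reduces friction. A master that is just being promoted is clearly expected to be alive and accessible, or else we would not promote it. It makes sense, then, to let orchestrator apply changes directly to the promoted master.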

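Limitations and drawbacks

The proxy layer makes the apps unaware of the master's identity, but it also masks the apps' identities from the master. All the master sees are connections coming from the proxy layer, and we lose information about the actual source of each connection.

As distributed systems go, we are still left with unhandled scenarios.

Notably, in a data center isolation scenario, and assuming the master is in the isolated DC, apps in that DC are still able to write to the master. This may result in state inconsistency once the network is restored. We are working to mitigate this split-brain by implementing a reliable STONITH from within the isolated DC itself. As before, some time will pass before the master is brought down, and there could be a short period of split-brain. The operational cost of avoiding split-brains altogether is very high.

More scenarios exist: an outage of Consul at the time of the failover, partial DC isolation, and others. We understand that with distributed systems of this nature it is impossible to close all of the loopholes, so we focus on the most important cases.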

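The results

Our orchestrator/GLB/Consul setup provides us with:

- Reliable failure detection,

- Data center agnostic failovers,

- Typically lossless failovers,

- Data center network isolation support,

- Split-brain mitigation (more in the works),

- No cooperation dependency,

- Between 10 and 13 seconds of total outage time in most cases.

- In less frequent cases we see up to 20 seconds of total outage time, and in extreme cases up to 25 seconds.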

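Conclusion

The orchestration/proxy/service-discovery paradigm uses well known and trusted components in a decoupled architecture, which makes it easier to deploy, operate, and observe, and where each component can independently scale up or down. We continue to seek improvements as we continuously test our setup.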

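All translations in this article are for learning and exchange purposes only. When reposting, please credit the translator and the source, and include a link to this article.

Our translation work follows the CC license. If our work infringes on your rights, please contact us promptly.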
