Thread pool scraper returns the wrong number of pages?

from concurrent.futures import ThreadPoolExecutor

import csv

from bs4 import BeautifulSoup

import requests

f = open('data.csv',mode='w')

csvwriter = csv.writer(f)

def download_one_page(url):

resp = requests.get(url)

res = BeautifulSoup(resp.text, 'html.parser')

div = res.find('div', class_="conter_con")

ulss = div.find_all('ul')

for ull in ulss:

title = ull.find('p', class_="title").text

img_url = ull.find('img').get('src')

data = []

data.append(title)

data.append(img_url)

csvwriter.writerow(data)

print(url,'提取完成')

if __name__ == '__main__':

with ThreadPoolExecutor(max_workers=10) as t:

for i in range(1,16):

t.submit(download_one_page,f"http://www.xinfadi.com.cn/newsCenter.html?current={i}")

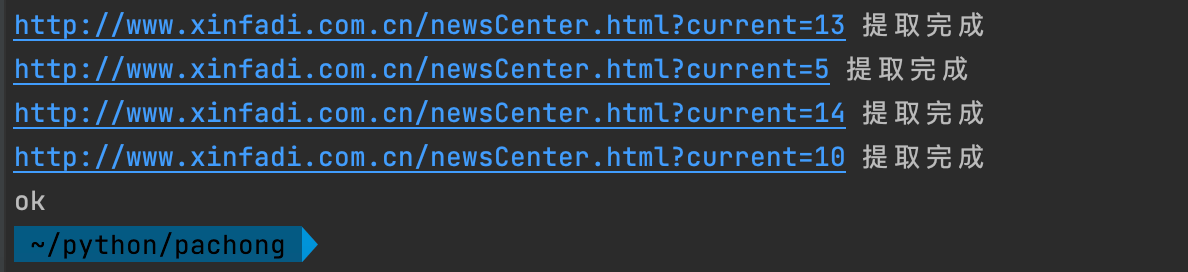

print('ok')请教下,为什么我只能爬取到4个页的数据?


It looks like the other pages failed to scrape. You could check each page's result to see whether it actually succeeded.
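One way to do that check, as a minimal sketch rather than a confirmed diagnosis: an exception raised inside a task started with `submit` is stored on the returned `Future` and is not printed unless `result()` or `exception()` is called, so a page that fails to parse (for example when `find` returns `None`) would vanish silently. In the sketch below, `download_one_page` is a hypothetical stand-in that takes a page number and deliberately fails on odd pages, and `run_all` is an illustrative helper name. Neither is the original request code.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def download_one_page(page):
    # Hypothetical stand-in for the real request + parsing step.
    # Odd pages raise, imitating a page where find() returned None.
    if page % 2:
        raise AttributeError(f"page {page}: parsing failed")
    return f"page {page} rows"


def run_all(pages, max_workers=10):
    succeeded, failed = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Keep the Future objects: submit() alone never re-raises
        # an exception that happened inside the worker thread.
        futures = {pool.submit(download_one_page, p): p for p in pages}
        for future in as_completed(futures):
            page = futures[future]
            try:
                # result() re-raises the worker's exception, if any.
                succeeded[page] = future.result()
            except Exception as exc:
                failed[page] = exc
    return succeeded, failed


if __name__ == "__main__":
    ok, bad = run_all(range(1, 16))
    print("succeeded:", sorted(ok))
    for page in sorted(bad):
        print("failed:", page, repr(bad[page]))
```

Applied to the real script, the same pattern would show which of the 15 URLs raised and why, which is more informative than counting the "extraction complete" lines.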

IMO, the script ends before all your tasks are done!

Not sure whether the following code works well: