讨论数量:

用created_at减去对应日期4点的差值取绝对值之后,然后按绝对值小到大排序,这个数据量,感觉应该先冗余一个差值绝对值字段 :speak_no_evil:

select * from (SELECT

*, ABS(

(

UNIX_TIMESTAMP(created_at) - UNIX_TIMESTAMP(

CONCAT(

DATE_FORMAT(created_at, "%Y-%m-%d"),

" 04:00:00"

)

)

)

) AS math_sub,

DATE_FORMAT(created_at, "%Y-%m-%d") as days

FROM

table_name

ORDER BY

math_sub ASC) as b

GROUP BY b.daysSELECT

*

FROM

(

SELECT

*,

row_number() over (

PARTITION BY market_id,

date( created_at )

ORDER BY

ABS(

UNIX_TIMESTAMP( created_at ) - UNIX_TIMESTAMP(

CONCAT( DATE_FORMAT( created_at, '%Y-%m-%d' ), ' 04:00:00' )))

) AS rn

FROM

sentiments

WHERE

created_at >= TIMESTAMP ( '2014-01-01' )

AND created_at < TIMESTAMP ( '2014-02-01' )

AND market_id = 1

) d

WHERE

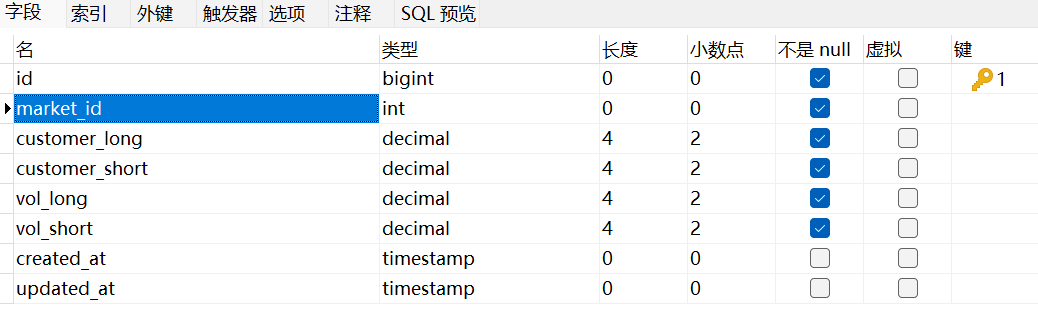

rn = 1建个 (market_id, created_at) 索引就能很快了?

手头没有 MySQL,拿最垃圾的 SQLite 测了测:

一亿数据量,抽取 (2023-01-01 至 2023-02-22) 每天中 created_at 最接近 04:00 的数据,只需 0.05 秒。

SQL 代码

-- 偷个懒,没建原始表,直接建索引

CREATE TABLE sentiments(

id INT,

market_id INT,

created_at TIMESTAMP,

PRIMARY KEY (market_id, created_at, id)

) WITHOUT ROWID;

-- 添加一亿条记录。字段取值:

-- market_id: [0, 1] 随机值

-- created_at: [1970-01-01 00:00:00, now) 随机值

INSERT INTO sentiments

SELECT

value,

abs(random() % 2),

abs(random() % (SELECT unixepoch()))

FROM generate_series(1, 100000000);

-- 计算

SELECT

id,

market_id,

date(created_at, 'unixepoch'),

time(max(created_at), 'unixepoch')

FROM sentiments

WHERE market_id = 1

AND created_at BETWEEN unixepoch('2023-01-01')

AND unixepoch('2023-02-22', '+1 day', '-1 second')

AND created_at % 86400 <= 4 * 3600

GROUP BY 2, 3;运行结果

id market_id date(created_at, 'unixepoch') time(max(created_at), 'unixepoch')

-------- --------- ----------------------------- ----------------------------------

76777678 1 2023-01-01 03:59:52

16087755 1 2023-01-02 03:59:19

79340726 1 2023-01-03 03:59:23

17833633 1 2023-01-04 03:59:24

49783397 1 2023-01-05 03:59:44

71230802 1 2023-01-06 03:59:56

60485644 1 2023-01-07 03:59:09

17387430 1 2023-01-08 03:59:59

47549039 1 2023-01-09 03:59:33

92300887 1 2023-01-10 03:59:07

89070679 1 2023-01-11 03:59:53

13268671 1 2023-01-12 03:59:53

40723986 1 2023-01-13 04:00:00

76719890 1 2023-01-14 03:59:38

26174302 1 2023-01-15 03:59:52

42960026 1 2023-01-16 03:58:39

19439814 1 2023-01-17 03:59:27

41320866 1 2023-01-18 03:58:41

47819221 1 2023-01-19 03:59:20

23953802 1 2023-01-20 03:59:17

34397037 1 2023-01-21 03:57:30

75175569 1 2023-01-22 03:59:58

81674069 1 2023-01-23 03:58:37

74445056 1 2023-01-24 04:00:00

62409959 1 2023-01-25 03:59:58

57855886 1 2023-01-26 03:59:50

89991143 1 2023-01-27 03:57:41

55270513 1 2023-01-28 03:59:01

37669119 1 2023-01-29 03:59:07

62745373 1 2023-01-30 03:56:43

98725596 1 2023-01-31 03:59:17

3922002 1 2023-02-01 03:59:46

1967569 1 2023-02-02 03:59:49

64607963 1 2023-02-03 03:58:23

51908674 1 2023-02-04 03:58:05

91748007 1 2023-02-05 03:58:39

90628926 1 2023-02-06 03:59:40

49714777 1 2023-02-07 03:58:27

71311919 1 2023-02-08 03:59:46

85980153 1 2023-02-09 03:59:48

10186467 1 2023-02-10 03:59:46

80455711 1 2023-02-11 03:58:49

17999943 1 2023-02-12 03:59:01

77599256 1 2023-02-13 03:59:58

86419432 1 2023-02-14 03:59:43

59121211 1 2023-02-15 03:59:59

76367224 1 2023-02-16 03:59:58

50988538 1 2023-02-17 03:59:17

95642148 1 2023-02-18 03:59:46

9183168 1 2023-02-19 03:59:48

35889212 1 2023-02-20 03:59:36

92482652 1 2023-02-21 03:59:37

94497566 1 2023-02-22 03:59:21

Run Time: real 0.057 user 0.031250 sys 0.000000

关于 LearnKu

关于 LearnKu

stackoverflow